How I started my home lab, what equipment I bought, and at what price. We will also look at my network topology and the services I run.

How it all started

At the beginning of 2024, I found the Reddit community /r/homelab, which focuses on people building their own data centers (mostly at home, although some colocate them at local data centers). These setups range from a single Raspberry Pi to several 42-unit server rack cabinets in a basement (although the bigger setups tend to be redirected to /r/HomeDataCenter).

These communities got me excited because they tap into topics I care about, although I haven't had the opportunity to do much infrastructure work in recent years.

For those who don't know me, I have played a lot with Kubernetes (managed K8s, K3s, Rancher, etc.), Ansible, Terraform, and many other infrastructure technologies in the past. I spent a lot of time building a PaaS on top of Kubernetes to host a WordPress website, but I decided to scrap it. These experiences were fun and taught me a lot, so finding these communities fired up that passion again.

So, I decided to start buying servers and setting them up conveniently to host applications safely and quickly.

I only bought second-hand computers

I started with a second-hand Lenovo ThinkCentre M910Q, mainly because it looked good and was cheap. My idea was to buy the cheapest (yet performant) computer I could find and see if I wanted to invest time into this not-so-new hobby.

I loved it. It was small, silent, and fun, and it revived a slightly faded passion for infrastructure and tech DIY. Also, it was only 420 AED (or 115 USD). A couple of weeks later, I bought another identical one. They each came with 8 GB of RAM and a 250 GB SSD; so far, I have upgraded the RAM to 32 GB each and will soon upgrade the SSDs to 1 TB (don't tell my wife 😉).

A month later, I made my next purchase: three Dell OptiPlex 3040s, each 345 AED (94 USD).

These were not as impressive as I had hoped, but they do the job. For example, they can only go as high as 16 GB of RAM; I wrongly assumed they could handle more.

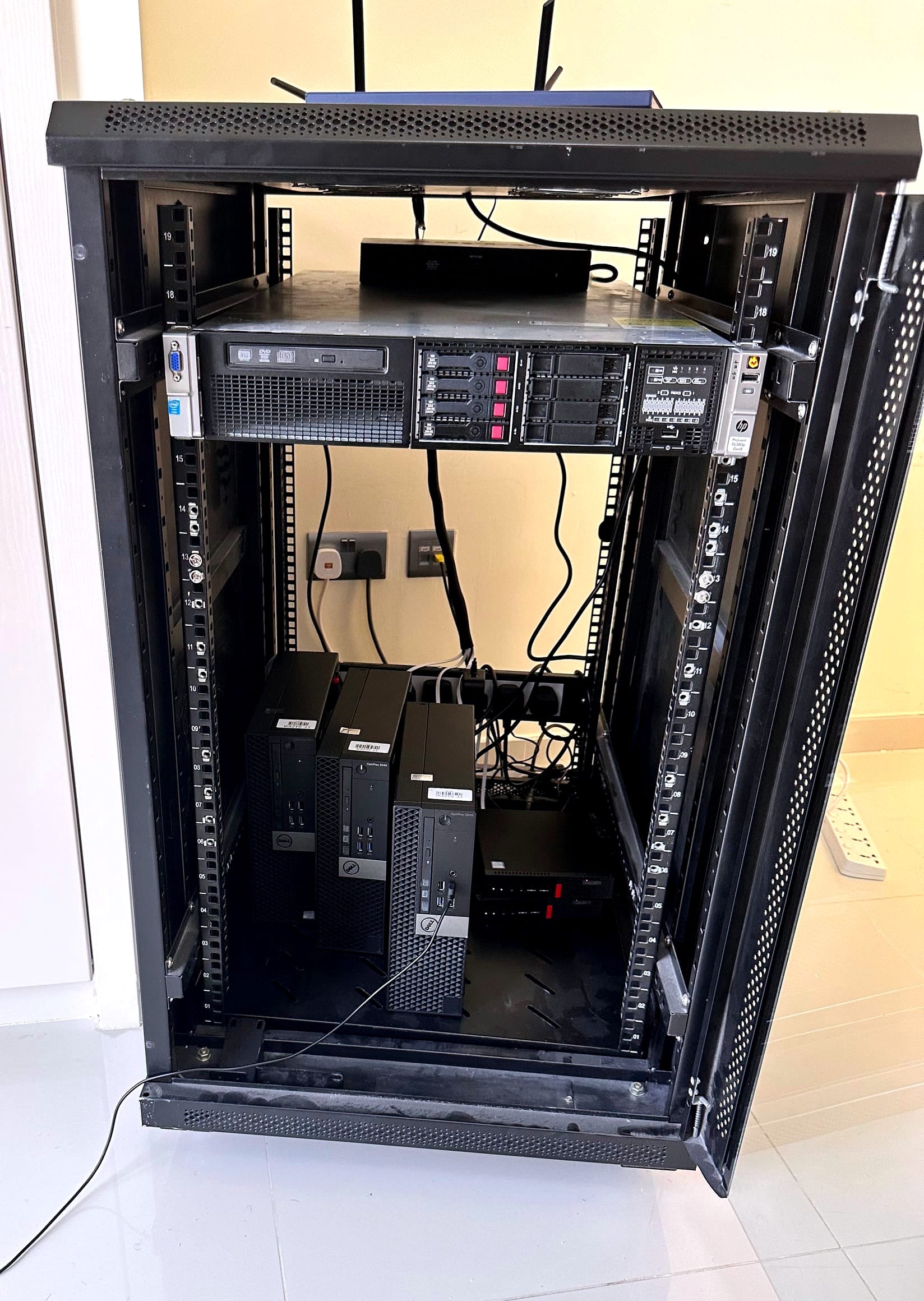

I didn't stop there: I purchased a few more items on dubizzle.com from a company upgrading its server farm. I got all of these for 1200 AED (326 USD):

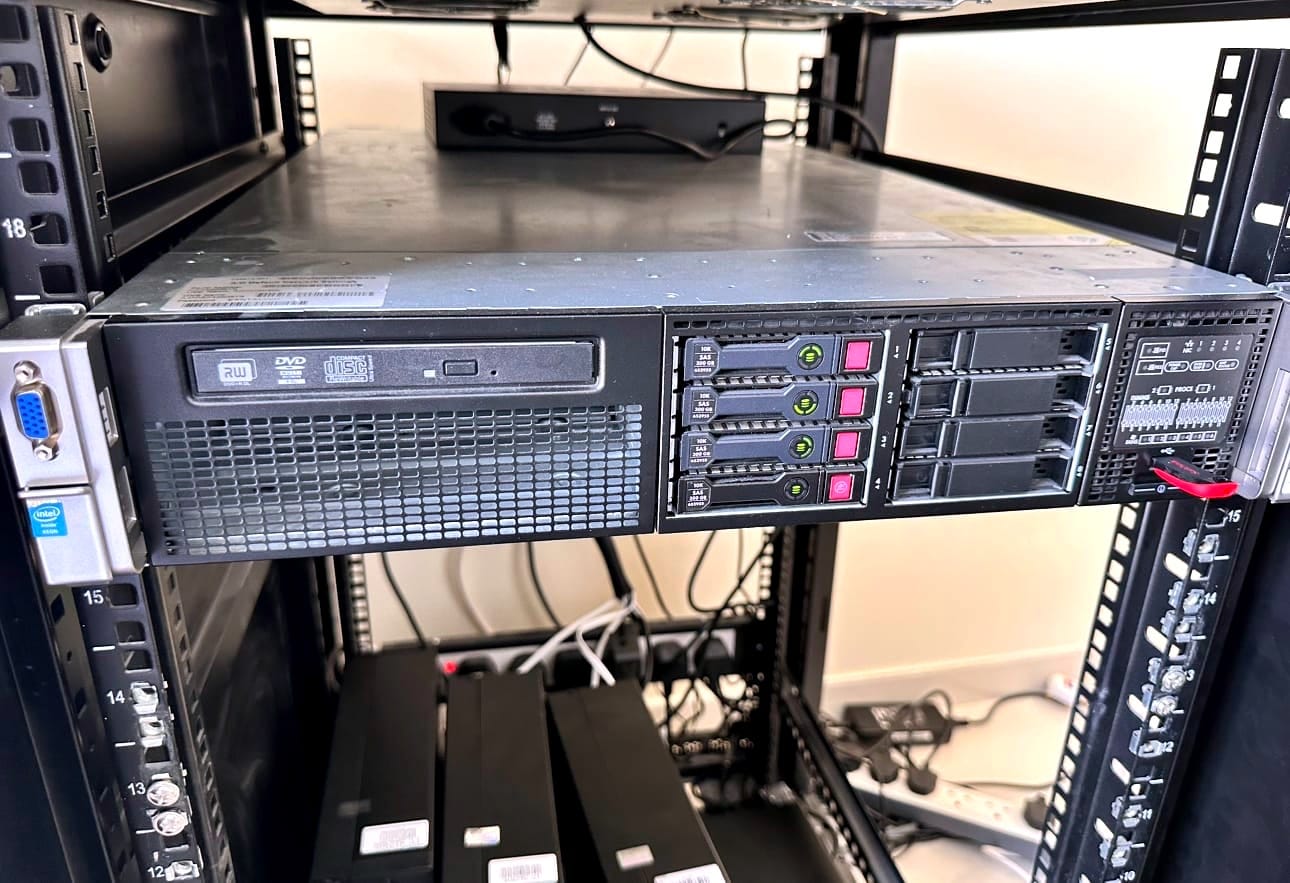

- An HP ProLiant Gen8 server (not yet running, so I will not talk about it here, except to say that it is super heavy).

- A 24-port Gigabit switch, so I could connect all these servers with short RJ45 cables for better cable management (although there is still a lot of work to do).

- A beautiful 19U server cabinet.

I think that was a great deal!

My final additions (so far) are:

- I am using free VMs on Oracle Cloud as edge servers for my personal CDN and as firewalls that hide my home IP.

- I revamped my old gaming laptop (just enough) and added it to the home lab, so I can use its GPU to host Ollama and its RAM (24 GB) and CPU to add compute to the lab. I had to tweak the Ubuntu configuration so it doesn't shut down when the lid is closed.
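The exact lid tweak is not described here. One common approach on systemd-based Ubuntu is to set the lid-switch keys in `/etc/systemd/logind.conf` to `ignore` and then restart `systemd-logind`. The sketch below is only an illustration of that approach, assuming the file already has a `[Login]` section; the helper name `set_lid_switch_ignore` is made up for this example, and it only transforms text rather than writing to the system.

```python
import re


def set_lid_switch_ignore(conf_text: str) -> str:
    """Return logind.conf text with the lid-switch keys set to 'ignore'.

    Hypothetical helper: it only rewrites a string. Writing the result to
    /etc/systemd/logind.conf and restarting systemd-logind is left to the
    operator.
    """
    keys = ("HandleLidSwitch", "HandleLidSwitchExternalPower")
    lines = conf_text.splitlines()
    for key in keys:
        # Match the key whether it is active or commented out with '#'.
        pattern = re.compile(rf"^\s*#?\s*{key}\s*=.*$")
        replaced = False
        for i, line in enumerate(lines):
            if pattern.match(line):
                lines[i] = f"{key}=ignore"
                replaced = True
        if not replaced:
            # Assumption: the file has a single [Login] section, so appending
            # at the end keeps the key inside it.
            lines.append(f"{key}=ignore")
    return "\n".join(lines) + "\n"


sample = "[Login]\n#HandleLidSwitch=suspend\n#HandleLidSwitchExternalPower=suspend\n"
print(set_lid_switch_ignore(sample))
```

After writing the new content back (as root), `sudo systemctl restart systemd-logind` makes the change take effect.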

How is it set up today?

Network

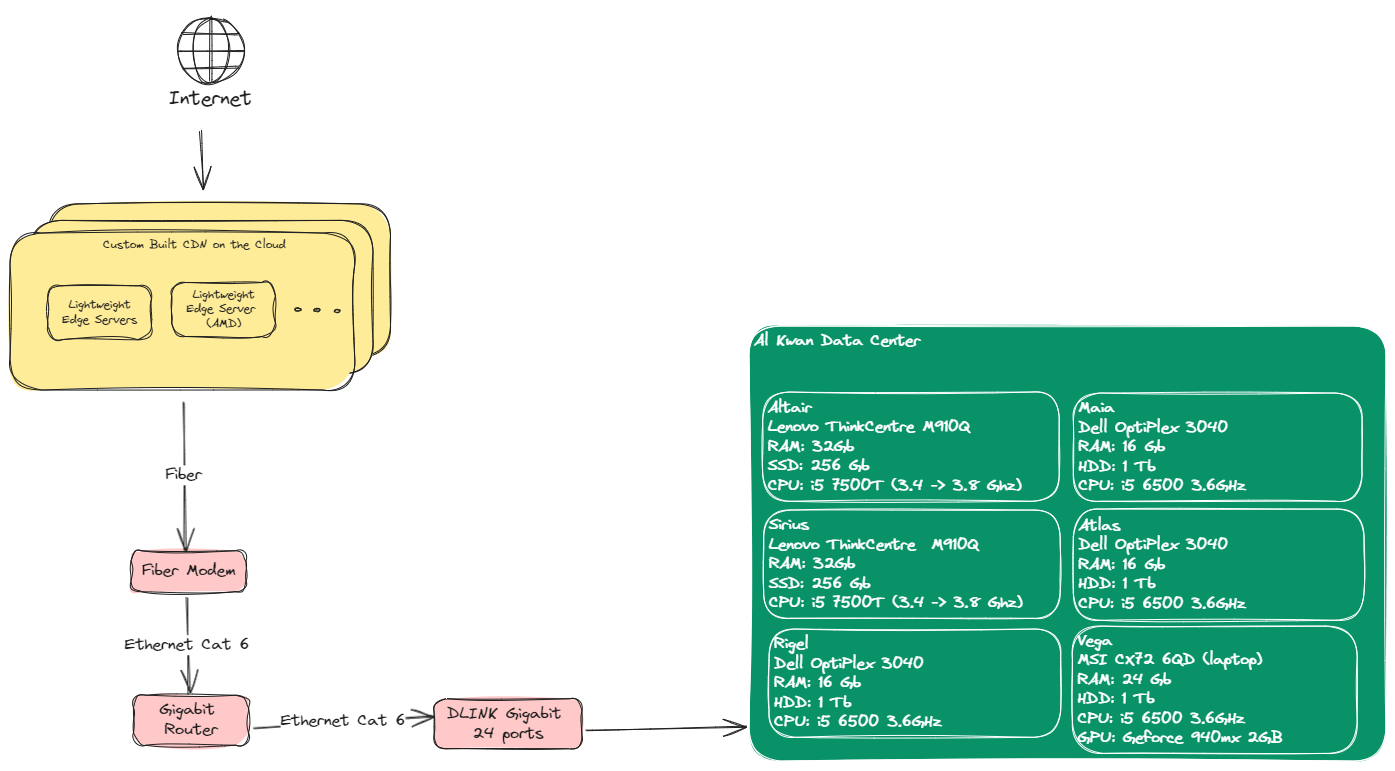

My home lab consists of bare-metal machines sitting at home and VMs in the cloud that act as CDN and egress/ingress nodes for me. I decided to add these VMs to protect myself from certain failure modes.

One interesting scenario is a move to another house or country. I can spin up nodes in the cloud, add them to my cluster, slowly drain the workload from the home nodes, and point my CDN to the cloud nodes. Once I'm settled in the new place, I do the reverse operation, all without disrupting my DNS records or SSL certificates.

The setup looks like this:

Workload

I have decided to go all in on Kubernetes for everything, or more precisely K3s, the lightweight Kubernetes distribution built by Rancher Labs (now part of SUSE). I am pretty familiar with Kubernetes, so this choice was natural for me, and it allowed me to do many cool things very quickly.

CI & CD

At the start of each project, I set up a Dockerfile and start building the app locally with Docker or docker-compose. It is second nature to me now.

I run my CI on GitHub workflows, including the code build, Docker image build, tests, and sometimes performance benchmarks (e.g., benchmarks for my open-source database Loggerhead).

I have implemented GitOps, which means I have a repository that holds all the K8s configurations. When I build a new Docker image, my CI automatically updates the definition files that use that image to the new version.
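To make the "CI updates the definition files" step concrete, here is a minimal sketch of what such a step could look like. It is not the actual CI script: the function name `bump_image_tag`, the image `ghcr.io/example/blog`, and the manifest are all hypothetical, and a real pipeline might use a dedicated tool instead of a regular expression.

```python
import re


def bump_image_tag(manifest: str, image: str, new_tag: str) -> str:
    """Replace the tag of every `image: <image>:<tag>` line in a manifest."""
    pattern = re.compile(
        rf"^(?P<prefix>[ \t]*(?:-[ \t]+)?image:[ \t]*{re.escape(image)}):[\w.\-]+[ \t]*$",
        re.MULTILINE,
    )
    # A function is used as the replacement so the tag is inserted literally.
    return pattern.sub(lambda m: f"{m.group('prefix')}:{new_tag}", manifest)


# Hypothetical manifest stored in the GitOps repository.
MANIFEST = """\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blog
spec:
  template:
    spec:
      containers:
        - name: blog
          image: ghcr.io/example/blog:1.4.0
"""

updated = bump_image_tag(MANIFEST, "ghcr.io/example/blog", "1.5.0")
print(updated)
```

The CI job would then commit the updated file to the configuration repository, which is the only thing the cluster-side tooling needs to see.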

Then, I installed ArgoCD on my cluster: ArgoCD listens to changes in that repository and applies them to my cluster. It also ensures that my cluster is consistently in the right state (in theory).
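Conceptually, this is a reconciliation loop: compare what the repository says should exist with what is actually running, then create, update, or delete resources until the two match. The toy model below illustrates that idea only. It is not ArgoCD's code or API, and the resource names and the `plan_sync` and `apply_plan` helpers are invented for the example.

```python
def plan_sync(desired: dict[str, str], live: dict[str, str], prune: bool = True) -> list[tuple[str, str]]:
    """Return the (action, resource) steps needed to make `live` match `desired`."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(("create", name))
        elif live[name] != spec:
            actions.append(("update", name))
    if prune:
        # Resources running in the cluster but absent from Git are removed.
        for name in sorted(live.keys() - desired.keys()):
            actions.append(("delete", name))
    return actions


def apply_plan(actions: list[tuple[str, str]], desired: dict[str, str], live: dict[str, str]) -> dict[str, str]:
    """Apply the planned steps to a copy of the live state."""
    new_live = dict(live)
    for verb, name in actions:
        if verb == "delete":
            new_live.pop(name)
        else:
            new_live[name] = desired[name]
    return new_live


# Hypothetical states: Git wants two apps, the cluster runs a stale one and a leftover job.
desired_state = {"blog": "blog:1.5.0", "api": "api:2.0.0"}
live_state = {"blog": "blog:1.4.0", "old-job": "job:0.1"}

plan = plan_sync(desired_state, live_state)
print(plan)
live_state = apply_plan(plan, desired_state, live_state)
print(plan_sync(desired_state, live_state))  # nothing left to do once in sync
```

Running the comparison repeatedly is what keeps the cluster in the expected state: manual drift shows up as a non-empty plan on the next pass.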

Database and other services

I run RabbitMQ for async communication and queueing between my services. RabbitMQ is hosted on K3s, too. So far, there is one node, but I will soon set up a high-availability cluster with three nodes.

I am also considering Kafka and NATS, but so far, RabbitMQ has won because I am familiar with it.

I host Redis for caching and geolocation data (I'm phasing this use case out now that I have built Loggerhead, https://github.com/fabricekabongo/loggerhead).

I also run Postgres on Kubernetes with the CNPG (CloudNativePG) operator. It spins up Postgres instances for me, sets up read and write nodes, and takes care of disaster recovery, backups, etc. It is great.

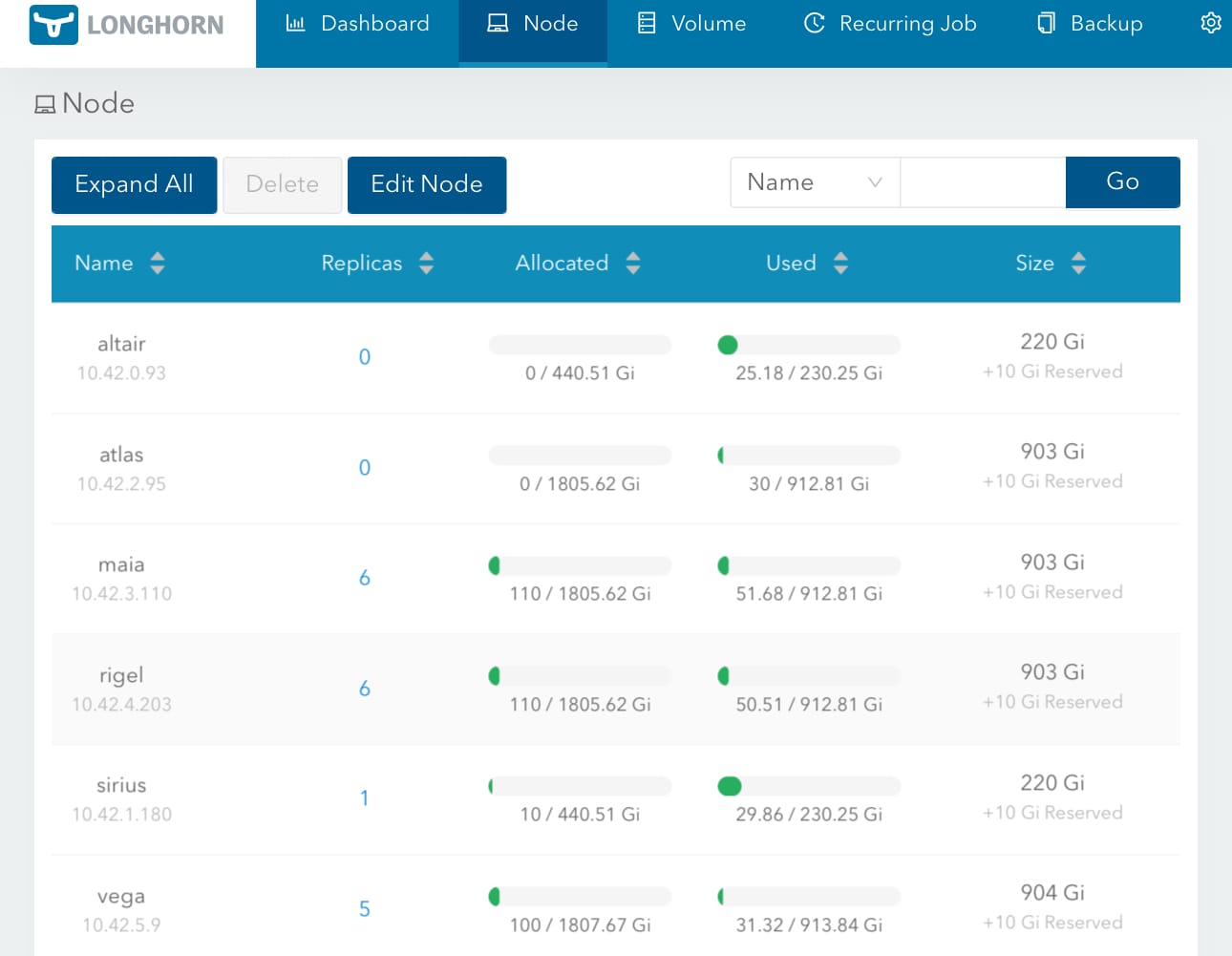

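So, with CNPG + Longhorn, I have 9 replicas of the data: every Postgres instance keeps its own full copy, and Longhorn then stores three replicas of each instance's volume. That is a bit of a waste, and I may change this.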
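The arithmetic behind that number is simple multiplication. The sketch below assumes three Postgres instances (inferred from the figure of 9 and the three Longhorn replicas, not stated explicitly) and shows how the total changes if the Longhorn replica count for the database volumes is lowered, which is one possible way to reduce the waste.

```python
def physical_copies(postgres_instances: int, longhorn_replicas: int) -> int:
    """Each Postgres instance holds a full copy, and each volume is replicated by Longhorn."""
    return postgres_instances * longhorn_replicas


# Assumption: 3 Postgres instances, each on a volume with 3 Longhorn replicas.
print(physical_copies(3, 3))  # current setup
print(physical_copies(3, 2))  # fewer Longhorn replicas per database volume
print(physical_copies(3, 1))  # rely on Postgres replication only
```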

Load Balancer

K3s comes with Traefik as an Ingress controller, which allows me to link a K3s service to a domain name and automatically request an SSL certificate from Let's Encrypt using cert-manager. This process is fast and convenient.
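As an illustration of what "linking a service to a domain name" involves, the sketch below builds a standard `networking.k8s.io/v1` Ingress as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. Everything specific here is an assumption for the example: the host `blog.example.com`, the service name and port, the `ClusterIssuer` called `letsencrypt-prod`, and the `traefik` ingress class name.

```python
import json


def build_ingress(name: str, host: str, service: str, port: int, issuer: str) -> dict:
    """Build an Ingress that routes `host` to `service` and asks cert-manager for a certificate."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            # cert-manager watches this annotation and issues the certificate.
            "annotations": {"cert-manager.io/cluster-issuer": issuer},
        },
        "spec": {
            "ingressClassName": "traefik",  # assumption: the class name in this cluster
            "tls": [{"hosts": [host], "secretName": f"{name}-tls"}],
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {"service": {"name": service, "port": {"number": port}}},
                            }
                        ]
                    },
                }
            ],
        },
    }


ingress = build_ingress("blog", "blog.example.com", "blog-svc", 80, "letsencrypt-prod")
print(json.dumps(ingress, indent=2))
```

The certificate ends up in the secret named under `tls`, and the Ingress controller serves it for the listed host.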

Storage

I use Longhorn (another product by Rancher Labs) to provide persistent storage to containers without those services having to be static (tied to a single node). Longhorn is set up as follows:

- Every volume I create always has three replicas.

- Snapshots are taken multiple times a day, making it easy to recover quickly. Full backups also run once a day.

- I store the backups on DigitalOcean Spaces (an S3 equivalent), but I’m looking for cheaper alternatives.

- I have set 10 GB per node as reserved storage so the OS has space even if the machine is full.

- I also over-provisioned it to 200%, so if I allocate 10 GB of storage to a service but it only uses 2, I can give the other 8 to another service. By default, this is not possible, and you end up with a lot of waste.
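To see how the reserved space and the over-provisioning percentage interact, here is a simplified model of the scheduling check: the space that can be promised to volumes is the disk size minus the reserved space, multiplied by the percentage. This is a sketch, not Longhorn's code. It ignores Longhorn's minimum-available-space check, the function names are invented, and the 250 GB disk is just the SSD size mentioned earlier in this post.

```python
def schedulable_gb(disk_gb: float, reserved_gb: float, over_provisioning_pct: float) -> float:
    """Total volume size that may be promised on one disk under this simplified model."""
    return (disk_gb - reserved_gb) * over_provisioning_pct / 100


def can_schedule(new_replica_gb: float, already_scheduled_gb: float,
                 disk_gb: float, reserved_gb: float, over_provisioning_pct: float) -> bool:
    """True if a new replica still fits within the over-provisioned capacity."""
    limit = schedulable_gb(disk_gb, reserved_gb, over_provisioning_pct)
    return already_scheduled_gb + new_replica_gb <= limit


# Assumed node: 250 GB disk with 10 GB reserved for the OS.
print(schedulable_gb(250, 10, 100))  # without over-provisioning
print(schedulable_gb(250, 10, 200))  # with 200% over-provisioning

# A 10 GB replica on a node that already has 235 GB promised to other replicas.
print(can_schedule(10, 235, 250, 10, 100))
print(can_schedule(10, 235, 250, 10, 200))
```

Over-provisioning only works as long as volumes stay well below their allocated size, so actual disk usage still needs watching.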

What's next?

The very next things that I want to do are as follows:

- Split the home lab in two and move some machines to the ground floor to ensure the entire lab cannot be turned off by mistake.

- Buy UPSs for the servers and the network gear, including the modem, so that even during a full power outage, my servers stay up for a couple of hours.

- Set up and upgrade the large HP ProLiant server until it has two CPUs, 735 GB of RAM, and 6 TB of storage.

- Expand my CDN to the main countries where my readers are present, namely:

  - UAE (27.1%) [CDN already in place]

  - USA (21.4%) [Next location]

  - Egypt (6%) [Interesting challenge]

  - India (4.4%) [After the US]

  - DRC (4.1%) [No cloud provider]

  - Germany (3.4%)

  - UK (2.7%)

  - Rest (~30%)

- Set up a lightweight monitoring interface that I will make public, so anyone can monitor the health of my home lab and of each node. I'm still refining the idea; I will come back to you when I have more clarity.

Conclusion

Writing this article was fun (and long), and setting up and maintaining my home lab is even more fun! I recommend all of you set up a "play" area where you get to explore and try out things you wouldn't do in a work environment due to risk or other reasons.
